
Vice president for Product Management Guy Rosen says Facebook intends to engage with civil society organisations like the Media Literacy Council, AWARE and Maruah to see if they would like to participate.

Mr Guy Rosen, vice president of Product Management at Facebook, was in Singapore to discuss the company's preliminary report on enforcing community standards. (Photo: Facebook)

SINGAPORE: Facebook intends to hold a series of public engagements to get people’s feedback on its community standards, and Singapore will host one of these “this year”, according to one of its executives.

Mr Guy Rosen, vice president for Product Management at the social networking company, told Channel NewsAsia in an interview on Tuesday (May 15) that Singapore and India will be the two sites in the region to hold the Facebook Forums: Community Standards event. The company intends to engage with civil society players like the Media Literacy Council, Association of Women for Action and Research (AWARE) and Maruah to see if they would be interested in participating, he added.

Their input is needed to help Facebook better “understand the local context” and setting in different markets, the executive explained.

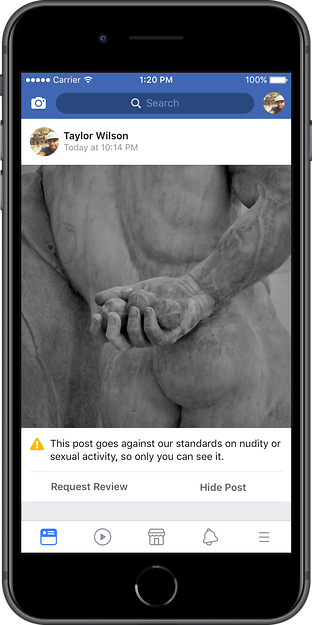

The public forums were announced last month, at the same time that Facebook released its internal guidelines for enforcing community standards and announced that it will give users the right to appeal if the social network removes photos, videos or written posts deemed to violate those standards.

In that announcement, Ms Monika Bickert, vice president of Global Policy Management, said efforts to improve and refine these standards depend on participation and input from people around the world, and named Germany, France, the United Kingdom and the United States as other countries where the company intends to hold these forums.

Citing CEO Mark Zuckerberg, she said then that the company won’t be able to prevent all mistakes or abuses “but we currently make too many errors enforcing our policies and preventing misuse of our tools”.

As part of its efforts to address this issue, Facebook on Tuesday released, for the first time, its preliminary report on enforcing community standards, which highlighted the work it is doing on six types of violation: graphic violence, adult nudity and sexual activity, terrorism propaganda, hate speech, spam and fake accounts.

An example of a Facebook post that could have been incorrectly removed and can now be appealed. (Image: Facebook)

TOOLS FOR SELF-POLICING ON FACEBOOK?

Facebook also stated earlier that it is committed to doubling the capacity of its review team from 10,000 to 20,000, so as to work on cases where human expertise is needed to understand context or nuance, such as hate speech.

Mr Rosen said that while there are no review teams based in Singapore, there are members of the content policy team who are based here and are involved in work on child exploitation, for instance.

Asked if there is a need for a dedicated review team based here to understand local languages such as Singlish, the executive said there are no plans to set one up, but there are already reviewers within the team capable of understanding these languages.

He added that spreading review teams far and wide – “with a one- or two-men team in a far-flung outpost” – tends to increase enforcement errors. Instead, Facebook’s approach is to have bigger groups residing in “centres of excellence” in order to review the content on its platform, he explained.

On the issue of giving users more tools to police themselves on the platform, such as letting them choose whether to turn on the masking screen that flags graphic violence or nudity, Mr Rosen did not rule out the possibility and said he sees the value of such tools in certain circumstances.

But these tools “have not been built yet”, he added.